Mashups of Bibliographic Data: A Report of the ALCTS Midwinter Forum

This year the ALCTS Forum at ALA Midwinter brought together three perspectives on massaging bibliographic data of various sorts in ways that use MARC, but where MARC is not the end goal. What do you get when you swirl MARC, ONIX, and various other formats of metadata in a big pot? Three projects: ONIX Enrichment at OCLC, the Open Library Project, and Google Book Search metadata.

Below is a summary of how these three projects are messin' with metadata, as told by the Forum panelists. I also recommend reading Eric Hellman's Google Exposes Book Metadata Privates at ALA Forum for his recollection and views of the same meeting.

ONIX Enrichment at OCLC

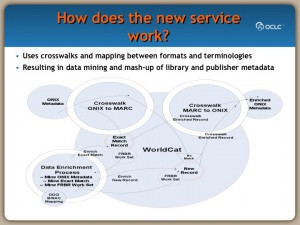

Renee Register, Global Product Manager for OCLC Cataloging and Metadata Services, was the first to present on the panel. Her talk looked at a new and evolving OCLC product for enhancing ONIX records with WorldCat records, and vice versa. ((For those not familiar with ONIX, it is a suite of standards promulgated by EDItEUR for the interchange of information on books and serial publications. It is primarily used as the communication channel from the publishing industry through distribution chains to retail establishments.))

As libraries, Renee said "our instincts are collaborative" but "our data and workflow silos encourage redundancy and inhibit interoperability." Beyond the obvious differences in metadata formats, the workflows of libraries differ dramatically from other metadata providers and consumers. In libraries (with the exception of CIP and brief on-order records) the major work of bibliographic production is performed at the end of the publication cycle and ends with the receipt of the published item. In the publisher supply chain, bibliographic data evolves over time, usually beginning months before publication and continuing to grow for months and years (sales information, etc.) after publication. Renee had a graphic showing the current flow of metadata around the broader bibliographic universe that highlighted the isolation of library activity relative to publisher, wholesaler, and retailer activity.

In her experience, Renee said that libraries need ways to enable our metadata to evolve over time and allow for publisher-created metadata to merge effectively with library-created metadata. The bibliographic record needs to be a "living, growing" thing throughout the lifecycle of a title and beyond. In concluding her remarks, she offered several resources to explore for further information: the OCLC/NISO study on Streamlining Book Metadata Workflow, the U.K. Research Information Network report on Creating Catalogues: Bibliographic Records in a Networked World, the Library of Congress Study of the North American MARC Records Marketplace, the Library of Congress CIP/ONIX Pilot Project, and the OCLC Publisher Supply Chain Website.

From MARC to Wiki with Open Library

The second presenter on the panel was Karen Coyle, talking about the mashup of metadata at the Open Library project at the Internet Archive. The slides from her presentation are available from her website.

Karen said right at the start that the Open Library project is different from most of what happens in libraries -- it is "someone outside the library world making use of library data" -- although the goal is arguably the same as others -- "One web page for every book ever published." As such, the Open Library isn't a library catalog as librarians think of it, in that it is not a representation of a library's inventory. It has metadata for every book it can know about and a pointer to places where the book can be found, including all of the electronic books in the Internet Archive (Open Content Alliance, Google Public Domain, etc.) as well as pointers back to OCLC WorldCat. Karen's role for the project is that of "Library Data Informant." The Internet Archive decided that they needed someone who understood library data in order to try to use it. From Karen's perspective, she is trying to be a resource for the project but not give them any guidance on how to implement the service. She is curious to see what the project will do when bibliographic data is viewed from a non-librarian perspective. If they have questions, or if they have assumptions about data that are wrong, then she intervenes.

Karen went on to briefly describe the Open Library system. Open Library doesn't have records; rather, it has field types and data properties. In this way, it uses semantic web concepts. "Author" is a type, "Author birthdate" is another type, and so forth. There are no set field types, so if the project gets data from a source for which a type doesn't yet exist, it can create a new one. Each type can have data properties such as string, boolean, text, link, etc. Nothing is required and everything is repeatable. Everything -- types, properties, and values -- gets a URI (a URI is an identifier like a URL, but conceptually a superset of the universe of URLs). Titles, authors, subjects, author birthdates, and so on have URIs. Lastly, the underlying data structures are based on wiki principles: all edits are saved and viewable, anyone can edit any value, anyone can add new types or properties, anyone can develop their own displays, etc.
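To make the description above concrete, here is a minimal Python sketch of a store where nothing is required, every property is repeatable, everything is addressed by a URI, and every edit is kept. It is an illustration only, not Open Library's actual implementation: the class names (`Thing`, `Edit`) and the URI strings such as `/type/author` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Edit:
    """One saved revision of a value (wiki principle: nothing is overwritten)."""
    editor: str
    value: Any


@dataclass
class Thing:
    """An entity such as an author or a book, identified by a URI."""
    uri: str
    type_uri: str
    # property URI -> list of values; each value is its own list of revisions
    properties: Dict[str, List[List[Edit]]] = field(default_factory=dict)

    def add(self, prop_uri: str, value: Any, editor: str) -> int:
        """Add another value for a property (everything is repeatable)."""
        values = self.properties.setdefault(prop_uri, [])
        values.append([Edit(editor, value)])
        return len(values) - 1

    def edit(self, prop_uri: str, index: int, value: Any, editor: str) -> None:
        """Record a new revision; earlier revisions stay viewable."""
        self.properties[prop_uri][index].append(Edit(editor, value))

    def current(self, prop_uri: str) -> List[Any]:
        """Latest revision of each value; empty if the property is absent,
        because nothing is required."""
        return [revs[-1].value for revs in self.properties.get(prop_uri, [])]

    def history(self, prop_uri: str, index: int) -> List[Any]:
        """All revisions of one value, oldest first."""
        return [rev.value for rev in self.properties[prop_uri][index]]


# Hypothetical URIs, for illustration only
author = Thing("/authors/A1", "/type/author")
pos = author.add("/property/name", "Woolf, Virginia", editor="import-bot")
author.edit("/property/name", pos, "Virginia Woolf", editor="volunteer")
print(author.current("/property/name"))
print(author.history("/property/name", pos))
```

Keeping a revision list per value, rather than per record, is one way to honor "all edits are saved and viewable" when there is no fixed record structure to version.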

The data that is now in Open Library came from a variety of sources. They started with a copy of the book records from the Library of Congress, and continue to receive the weekly updates. They performed a crawl of Amazon's book data. They have also gotten some data from publishers, libraries, and individual users. The last is perhaps the most interesting because it is mainly people outside the western world who are otherwise having trouble getting their works recognized.

Problems, Issues, Challenges, and Opportunities with the Data

People who use library data without the biases or assumptions of librarians come up with interesting ways to view the data. Karen described a few of them.

- Names -

- "These library forms of names? Honestly no one but us can stand them." Even something as simple as the form of last-name-comma-first-name is troublesome. No one else uses this form of the name: not Amazon, not Wikipedia, etc. In processing these, any information between parentheses is deleted, and birth and death dates are moved into separate field types.

- Titles -

- In working with the Open Library developers, this is one place where Karen tried insisting on applying a library practice: knowing the initial article. For us, this is important for sorting books in alphabetical order. The developer response -- why do we have to sort in alphabetical order? "Where else but library catalogs do we see things sorted in alphabetical order? Not in Google, not in Amazon, not anywhere. Alphabetical order is not in the mindset anymore." They also found that the title might include extraneous data. Amazon, for instance, appends the series title in parentheses to the main title. This is a demonstration of how other communities are not as concerned about strongly typing and separating information into fields. Amazon, of course, has reasons for putting series information into the main title: it helps sell books.

- Product dimensions -

- Publishers and distributors need to know characteristics of an item such as height, width, depth, and weight; they, of course, need to put it in a box and ship it. Libraries, concerned about placing the item on the shelf, record just height. Recording pagination is different, too: libraries use odd notations "ill. (some col)" and "xv, 200p." versus simply "200 pages."

- Birthdates -

- Librarians use birthdates to distinguish names; if there is no need to distinguish a name, birth and death dates are not added. Someone looking at this from the outside would ask 'Why don't all authors have birth and death dates?' This can be useful information for viewing the context of an item, not just to distinguish author names. Open Library ran author names against Wikipedia to pick up not only birth and death years, but also the actual dates.

- Subject headings -

- Using Library of Congress Subject Headings in their precoordinated form was out of the question for Open Library. In processing the data, the Open Library developers just broke them apart into segments and used the segments. But because they were able to do data mining on the subject field types, they did find statistical relationships between the disassembled precoordinated headings and were able to present those to the user.

- The View of the Data -

- Rather than a traditional library view of long lists of author-title, the Open Library (in its next version coming in February) will have several different views into the mass of data: Authors; Books (what we would call FRBR 'manifestations'); Works; Subjects; and eventually places, publishers, etc. For example, when searching for an author one would get the author page. On it would be all of the works from the author as well as other biographical information. It looks similar to a WorldCat identities page, except it is the actual user interface built into the system. Similarly, every work will have a page, and at the bottom of it one will see all of the editions of the work. Also, each subject will have a page, and one will see a list of works with that subject as well as authors who write on that subject. As Karen said, "The subject itself becomes an object of interest in the database, not just something that is just tacked on to the bottom of the library record."

- Data mining -

- With the data in this format, it is possible to perform data mining actions against it. Simple examples include tallying countries of publication, popular places that appear, etc. When they had the problem of author names -- knowing when to reverse surname and forename -- they ran the names against Amazon and Wikipedia and retained the ones where they found the order of the entry was the same. The Open Library developers are also experimenting with data mining to find publisher names. Publisher names, of course, vary dramatically, but by using ISBN prefixes they can pull together related items into a "publisher" view.
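The name handling described in the Names item above (dropping parenthetical qualifiers, moving dates into their own fields, and reversing surname and forename) can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not the Open Library code: the function name `parse_library_name` and the `ParsedName` fields are hypothetical, and the regular expressions only cover the common "Surname, Forename (qualifier), birth-death" pattern.

```python
import re
from typing import NamedTuple, Optional


class ParsedName(NamedTuple):
    display: str            # natural-order name, e.g. "Virginia Woolf"
    birth: Optional[str]    # four-digit year, or None
    death: Optional[str]    # four-digit year, or None


_PAREN = re.compile(r"\s*\([^)]*\)")
_DATES = re.compile(r",?\s*(\d{4})?\s*-\s*(\d{4})?\.?\s*$")


def parse_library_name(heading: str) -> ParsedName:
    """Turn a library-style name heading into display name plus dates."""
    # 1. Delete anything between parentheses, e.g. "(Clive Staples)"
    text = _PAREN.sub("", heading).strip()

    # 2. Move a trailing "1882-1941" or "1950-" into separate fields
    birth = death = None
    match = _DATES.search(text)
    if match and (match.group(1) or match.group(2)):
        birth, death = match.group(1), match.group(2)
        text = text[: match.start()]
    text = text.rstrip(" ,")

    # 3. Reverse "Surname, Forename" into "Forename Surname"
    if "," in text:
        surname, forename = (part.strip() for part in text.split(",", 1))
        text = f"{forename} {surname}"
    return ParsedName(text, birth, death)


for heading in (
    "Woolf, Virginia, 1882-1941",
    "Lewis, C. S. (Clive Staples), 1898-1963",
    "Freud, Sigmund",
):
    print(parse_library_name(heading))
```

A blind reversal like step 3 will get some names wrong (corporate names, or names from cultures where the family name comes first), which is presumably why the developers checked the results against Amazon and Wikipedia before keeping them.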

Karen suggested watching the site of Edward Betts, one of the developers of the Open Library project, with an eye on the data mining aspects. She said it is fun to look at our data when it can be viewed from this different point of view. She also said to watch out for a new version of the Open Library website coming in February.

Google Book Search Metadata

The final presenter was Kurt Groetsch, Technical Collections Specialist at Google where he works to provide understanding and insight into library partner collections and the digitized books from Google. Kurt said that "Google has been fairly circumspect over the years about what we do on the Book Search project." He said it was a bit of a cultural legacy from the rest of the company and also possibly an artifact of the copyright litigation, but he is hoping to change that. His presentation looked at how Google works with book metadata from three vantage points -- the inputs into Google's system, parsing by Google's algorithms, and analysis and output into the public interfaces.

On the input side, Google is getting bibliographic metadata from over 100 sources in a variety of formats. MARC records are coming from libraries, union catalogs, commercial providers (OCLC), and publishers/retailers (one publisher supplies records in MARC format). Google also gets ONIX records from commercial providers (such as Ingram and Bowker), publishers, and retailers. Google is especially interested in data from non-U.S. retailers because it is a source of information about books published outside the United States; it helps facilitate discovery of items that they may not otherwise encounter in the publisher and library programs. Google also receives records in a variety of "idiosyncratic formats" -- for example, publisher-contributed metadata (via the Publisher Partner Program); information associating books with jacket images; name authority records (from LC); reviews; popularity signals (sales data as well as anonymized circulation data from some library partners, useful for feeding into the relevancy ranking algorithm); and internally-generated metadata (for instance, whether a book is commercially available or not). Google processes all of this information to come up with a single record that describes a book. At this point they have over 800 million bibliographic records and one trillion bits of information in those records.

All of these records from all of these sources are processed and remixed with Google's parsing algorithms about twice a week. The first step is to transform the incoming records into a "less verbose format" for storage and processing. It is a SQL-like structure that allows elements of the metadata to be queried. Records are then parsed to extract specific bits of information, transform the bits as necessary, and write the information to an internal "resolved records" data structure (a subset of the data coming from the input formats). In the presentation, Kurt had examples of how making inferences from data coming from both MARC and ONIX can be troublesome. Parsing also involves extracting "bibkeys" from the records to aid in matching across sources of data. Four types of identifiers are extracted from bibliographic records: OCLC numbers, LCCNs, ISBNs, and ISSNs. They usually provide useful signals when matching bibliographic records and help with assertions that two records describe the same manifestation. Google also tries to parse item data (enumeration and chronology) when present in records representing multi-volume works. They will also treat a barcode as a form of "bibkey" if they get it from a library. The parsing algorithm will also split records containing multiple ISBNs representing different product forms (e.g. hardback, paperback, etc.).
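As a rough illustration of what "extracting bibkeys" might involve, the Python sketch below pulls ISBNs and OCLC numbers out of raw field strings and normalizes them so that the same identifier from two sources compares equal. It is not Google's parser: the input shape (a dict with hypothetical `"isbn"` and `"oclc"` lists of raw strings) and the function names are assumptions, and LCCNs and ISSNs are left out for brevity. The check-digit arithmetic is the standard ISBN-10 and ISBN-13 calculation.

```python
import re
from typing import Dict, List, Set, Tuple


def isbn10_is_valid(isbn: str) -> bool:
    """ISBN-10: weights 10..1, total divisible by 11, 'X' counts as 10."""
    if not re.fullmatch(r"\d{9}[\dX]", isbn):
        return False
    total = sum((10 - i) * (10 if ch == "X" else int(ch))
                for i, ch in enumerate(isbn))
    return total % 11 == 0


def isbn13_is_valid(isbn: str) -> bool:
    """ISBN-13: alternating weights 1 and 3, total divisible by 10."""
    if not re.fullmatch(r"\d{13}", isbn):
        return False
    total = sum(int(ch) * (1 if i % 2 == 0 else 3)
                for i, ch in enumerate(isbn))
    return total % 10 == 0


def isbn10_to_13(isbn10: str) -> str:
    """Prefix 978, drop the old check digit, compute the new one."""
    core = "978" + isbn10[:9]
    total = sum(int(ch) * (1 if i % 2 == 0 else 3)
                for i, ch in enumerate(core))
    return core + str((10 - total % 10) % 10)


def extract_bibkeys(record: Dict[str, List[str]]) -> Set[Tuple[str, str]]:
    """Return normalized (kind, value) pairs found in a simplified record."""
    keys: Set[Tuple[str, str]] = set()
    for raw in record.get("isbn", []):
        # Keep the leading run of digits, X and hyphens; drop "(pbk.)" etc.
        match = re.match(r"[\dXx-]+", raw.strip())
        if not match:
            continue
        candidate = match.group().replace("-", "").upper()
        if isbn10_is_valid(candidate):
            candidate = isbn10_to_13(candidate)
        if isbn13_is_valid(candidate):
            keys.add(("isbn", candidate))
    for raw in record.get("oclc", []):
        # Strip prefixes such as "(OCoLC)ocm" and any leading zeros
        digits = re.sub(r"\D", "", raw)
        if digits:
            keys.add(("oclc", str(int(digits))))
    return keys


# The ISBN below is the one quoted later in this report
print(extract_bibkeys({"isbn": ["0-19-503848-7 (pbk.)"],
                       "oclc": ["(OCoLC)ocm00012345"]}))
```

Note that a valid check digit only shows that an ISBN is well formed; as the non-unique ISBN examples later in this report show, it does not guarantee that the number identifies a single book.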

With all of this data parsed into records, Google starts its clustering process where records are examined and attached to each other. Bibkeys provide significant evidence for relating records to each other, but bibkeys are not always present in a record (non-U.S. records and older records frequently contain no bibkeys). The algorithms then fall back on text similarity matching using title, subtitle, contributor and other fields such as publisher and publication year. The results are clusters of records representing the same manifestation. An algorithm then attempts to derive the "best-of" record for a single cluster from all of the parsed input records. This is done in a field-by-field voting process based on the trustworthiness of individual fields from record sources.
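The two stages described above, clustering and then field-by-field voting, can be sketched as follows. This is a toy model under stated assumptions, not Google's algorithm: records are plain dicts with hypothetical keys, text similarity uses Python's standard `difflib.SequenceMatcher` with an arbitrary 0.9 threshold, and the trust weights are invented for the example.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from typing import Dict, List, Tuple

Record = Dict[str, object]
FIELDS = ("title", "contributor", "publisher", "year")


def _text(rec: Record) -> str:
    return " ".join(str(rec.get(f, "")).lower()
                    for f in ("title", "contributor", "year"))


def same_book(a: Record, b: Record, threshold: float = 0.9) -> bool:
    """Shared bibkey is strong evidence; otherwise fall back to text."""
    if set(a.get("bibkeys", ())) & set(b.get("bibkeys", ())):
        return True
    return SequenceMatcher(None, _text(a), _text(b)).ratio() >= threshold


def cluster(records: List[Record], threshold: float = 0.9) -> List[List[Record]]:
    """Group records with union-find so that matches chain transitively."""
    parent = list(range(len(records)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            if same_book(records[i], records[j], threshold):
                parent[find(j)] = find(i)

    groups: Dict[int, List[Record]] = defaultdict(list)
    for i, rec in enumerate(records):
        groups[find(i)].append(rec)
    return list(groups.values())


def best_of(group: List[Record],
            trust: Dict[Tuple[str, str], float]) -> Dict[str, object]:
    """Each record votes per field, weighted by (source, field) trust."""
    votes: Dict[str, Dict[object, float]] = defaultdict(lambda: defaultdict(float))
    for rec in group:
        for fld in FIELDS:
            value = rec.get(fld)
            if value:
                votes[fld][value] += trust.get((str(rec["source"]), fld), 1.0)
    return {fld: max(tally, key=tally.get) for fld, tally in votes.items()}


# Invented records and weights, for illustration only
records = [
    {"source": "library_a", "bibkeys": [("isbn", "9780195038484")],
     "title": "Example Title", "contributor": "A. Author", "year": "1961"},
    {"source": "retailer", "bibkeys": [("isbn", "9780195038484")],
     "title": "Example Title", "contributor": "A. Author", "year": "1900"},
    {"source": "library_b", "bibkeys": [],
     "title": "Example Title", "contributor": "A. Author", "year": "1961"},
]
trust = {("library_a", "year"): 2.0, ("retailer", "year"): 0.5}
for group in cluster(records):
    print(best_of(group, trust))
```

The pairwise comparison is quadratic in the number of records, so it only works at toy scale; a system with hundreds of millions of records would need some form of indexing or blocking before comparing anything.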

Kurt went into some of the challenges facing the team building the clustering and best-of record creation algorithms. For instance, in dealing with multivolume works they know of 5 numbering schemas with 3 number types in 15 different languages. Enumeration is now showing in the public display, but the development team is still working with unparsable item data due to inconsistent cataloging practices between institutions...and sometimes inconsistencies within an institution. Another problem is non-unique identifiers. In the current data set ISBN 7899964709 is shared by 75 books and ISBN 7533305353 is associated with 1413 books. There are also poor quality or "junk records". Kurt said his favorite was "The Mosaic Navigator" by Sigmund Freud published in 1939. These are hard to identify with an algorithm, and they rely on reports of problems that enable the developers to go in and "kill" the troublesome record. Another example is a book by Virginia Woolf where the incoming record had conflicting information; it had two 260 fields that contained different dates (1961, correct, and 1900) with fixed field information that strongly suggested that 1900 was the single date of publication. When the data problem is systematic, they can identify it and compensate for it. Kurt's example for this case was "The United States Since 1945" published in 1899. This one was highlighted in Geoffrey Nunberg's criticism of Google Books metadata. In this case, there was a source of metadata from Brazil that used 1899 whenever the date of publication was unknown. When Google went back and looked at the date distribution of books there was a huge spike in 1899. Once Google knew about it they were able to go in and kill that information from that source of records. ((A side note: Google isn't the only one tripped up by this. If one searches for the ISBN of the item, 0195038487, one finds more than one site that has the same incorrect publication date. At least Google is attempting to clean up the data!))
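The 1899 story suggests a simple check that anyone with a pile of records can run: count publication years and look for a year that towers over its neighbors. The Python sketch below is one naive way to do that, not a description of what Google actually did; the function name `suspicious_years` and the thresholds (`factor`, `min_count`) are arbitrary assumptions.

```python
from collections import Counter
from statistics import median
from typing import Iterable, List


def suspicious_years(years: Iterable[int],
                     factor: float = 10.0,
                     min_count: int = 5) -> List[int]:
    """Flag years whose record count dwarfs that of the nearby years.

    A year is flagged when it has at least `min_count` records and at
    least `factor` times the median count of the two years on each side.
    """
    counts = Counter(years)
    flagged = []
    for year, n in counts.items():
        neighbours = [counts.get(year + d, 0) for d in (-2, -1, 1, 2)]
        baseline = max(median(neighbours), 1)  # avoid dividing by zero
        if n >= min_count and n / baseline >= factor:
            flagged.append(year)
    return sorted(flagged)


# Invented counts: one source stamping unknown dates as 1899
sample = [1899] * 50 + [1897] * 2 + [1898] * 3 + [1900] * 2 + [1901] * 3
print(suspicious_years(sample))
```

Running the same count per record source, rather than over the whole data set, would point more directly at the supplier whose default value is causing the spike.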

In closing, Kurt said that Google is committed to engaging with the library community on improving metadata and metadata processing.

The text was modified to update a link from http://www.niso.org/publications/white_papers/Stream lineBookMetadataWorkflowWhitePaper.pdf to http://www.niso.org/publications/white_papers/StreamlineBookMetadataWorkflowWhitePaper.pdf on January 19th, 2011.

The text was modified to remove a link to http://www.oclc.org/speakers/bios/register_renee.htm on February 11th, 2011.

The text was modified to update a link from http://cip.loc.gov/onixpro.html to http://www.loc.gov/publish/cip/topics/onixpro.html on November 13th, 2012.